"When I think of open source, Linux is the core," says William Eshagh, a technologist working on Open Government and the Nebula Cloud Computing Platform out of the NASA Ames Research Center. Eshagh recently announced the launch of code.nasa.gov, a new NASA website intended to help the organization unify and expand its open source activities. Recently I spoke with Eshagh and his colleague, Sean Herron, a technology strategist at NASA, about the new site and the roles Linux and open source play at the organization.

Eshagh says that the idea behind the NASA code site is to highlight the Linux and open source projects at NASA. "We believe that the future is open," he says. Although NASA uses a broad array of technology, Linux is the default system and has found its way into both space and operational systems. In fact, the websites are built on Linux, the launch countdown clock runs on Fedora servers, and Nebula, the open source cloud computing project, is Ubuntu-based. Further, NASA worked with Rackspace Hosting to launch the OpenStack project, the open source cloud computing platform for public and private clouds.

Why is NASA contributing to open source? Eshagh says that NASA's open systems help inspire the public and provide an opportunity for citizens to work with the organization and help move its missions forward. But the code site isn't only about sharing with the public and making NASA more open. The site itself is intended to help NASA figure out how the organization is participating in open source projects.

Three Phase Approach

In the initial phase, the code site organizers are focusing on providing a central location to organize the open source activities at NASA and lower the barriers to building open technology with the help of the public. Herron says that the biggest barrier is that people simply don't know what's going on in NASA because there is no central list of open source projects or contributions. Within NASA, employees don't even have a way to figure out what their colleagues are working on, or who to talk to within the organization about details such as open source licenses.

At NASA, the open source process starts with the Software Release Authority, which approves the release of software. Eshagh says that even finding the names of the people in the Software Release Authority was an exercise, so moving the list of names out front and shining a light on it makes it easier to find the person responsible. The new guide on the code site explains the software release guidelines and provides a list of contacts and details about releasing the software, such as formal software engineering requirements.

Phase two of the code project is community focused and has already started. Eshagh says there's a lot of interest in open source at NASA, including internal interest, but the open.NASA team is still trying to figure out the best way to connect people with projects within the agency.

Eshagh says that the third phase, which focuses on version control, issue tracking, documentation, planning, and management, is more complicated. He points to the Goddard General Mission Analysis Tool (GMAT), an open source, platform-independent trajectory optimization and design system, as an example. There is currently no coherent or coordinated approach to developing software and accepting contributions from the public and industry. "What services do they need to be successful? What guidance do they need from NASA?" Eshagh wonders. "We're trying to find best-of-breed software solutions online; GitHub comes to mind," he says.

Phase three will also include the rollout of documentation systems and a wiki, which Eshagh and his team want to offer as a service, but in a focused, organized way to help projects move forward. He says that NASA doesn't promote any project or product; the team just wants the best tool for the job. "We're taking an iterative approach and making information available as we get it and publish it," Herron says. They've already received a bunch of feedback about licenses, for example.

Measuring Success

How will the open.NASA team measure the success of the code site and their other efforts? "We're trying to build a community," Eshagh explains. "We've kind of tapped into an unsatisfied need for public and private individuals to come together."

He says they'll measure success by how many projects that they didn't previously know about come forward and highlight what they are doing. "People are actually reaching out and I think that's a measure of success," he says. Also, the quantity and quality of the projects and toolchains, as well as how many people use them, will be considerations.

Tackling Version Control

In December, Eshagh announced NASA's presence on GitHub, and their first public repository houses NASA's World Wind Java project, an open source 3D interactive world viewer. Additional projects are being added, including OpenMDAO, an open-source Multidisciplinary Design Analysis and Optimization (MDAO) framework; NASA Ames StereoPipeline, a suite of automated geodesy and stereogrammetry tools; and NASA Vision Workbench, a general-purpose image processing and computer vision library.

In March 2011, NASA hosted its first Open Source Summit at Ames Research Center in Mountain View, California. GitHub CEO Chris Wanstrath and Pascal Finette, Director of Mozilla Labs, were among the speakers. Eshagh says that at the event, he learned that when Erlang was first released on GitHub, contributions increased by 500 percent. "We are hoping to tap into that energy," he adds.

"A lot of our projects were launched under SVN and continue to be operated under there," Eshagh says. Now open.NASA is looking at git-svn to bridge these source control systems.
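For readers unfamiliar with git-svn, here is a minimal sketch of the kind of workflow it enables. It is not NASA's actual setup: the repository URL, the directory name, and the helper function `git_svn_commands` are hypothetical, and the sketch assumes the SVN repository uses the conventional trunk/branches/tags layout. The helper only builds the commands so they can be inspected before anyone runs them.

```python
import shlex


def git_svn_commands(svn_url, target_dir, stdlayout=True):
    """Build (but do not run) the git-svn commands for a basic SVN bridge.

    svn_url and target_dir are placeholders supplied by the caller.
    """
    # Clone the SVN history into a new Git repository.
    clone = ["git", "svn", "clone"]
    if stdlayout:
        # Assumes the conventional trunk/branches/tags layout.
        clone.append("--stdlayout")
    clone += [svn_url, target_dir]

    return [
        clone,
        # The next two run inside target_dir once the clone exists.
        ["git", "svn", "rebase"],   # pull new SVN revisions into Git
        ["git", "svn", "dcommit"],  # push local Git commits back to SVN
    ]


if __name__ == "__main__":
    # Hypothetical URL and directory, for illustration only.
    for cmd in git_svn_commands("https://svn.example.org/project", "project-git"):
        print(shlex.join(cmd))
```

With a bridge like this, a project can keep SVN as its system of record while contributors work in Git.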

"A lot of projects don't have change history or version control, so GitHub will help with source control and make it visible and available," Herron adds. Since they've posted the GitHub projects, Eshagh and his team have already seen some forks and contributions back, but he says the trick is to figure out how to get the project owners to engage and monitor the projects or to move to one system.

Which NASA projects would Eshagh like to see added to GitHub? "We have so many projects, we don't have favorites," he says. "If this is a viable solution, increases participation, and makes it easier for developers to develop, then we'd like to see them there." He adds that his team would like to see all of NASA's open source projects have version control, use best practices, and be handled in a way that the public can see them.

2011 Achievements

At the end of 2011, Nick Skytland, Program Manager of Open Government at the Johnson Space Center, posted the 2011 Annual Report by the NASA Open Government Initiative. His infographic says that there were 140,000,000 views of the NASA homepage; 17 Tweetups held with more than 1,600 participants; 2,371,250 combined followers on Twitter, Facebook, and Google+; and 50,000 followers on Google+ within the first 25 days.

"The scope and reach of our social media is not insignificant," Eshagh says. Herron points out that the nasa.gov site is the most visited US government site. He says that the community is very engaged. "People love to see our code," he adds. "People are excited about it." In fact, the open source team hopes to use their code to keep people excited about the space program.

NASA is now in an interesting phase, Eshagh explains. He says that after the space shuttle program ended last year, NASA Deputy Administrator Lori Garver was speaking to a group of students when one of them asked her whether she's now out of a job. (She's not.) The new code site helps illustrate how many projects are still active and growing at NASA. "We still have a lot of work to do and a lot of people are pulling for us," Eshagh says.

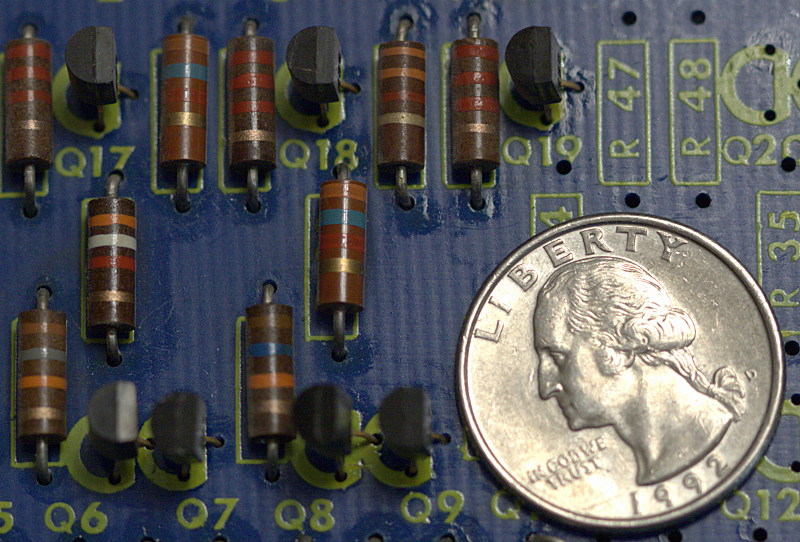

Talk is cheap, so take a look at Figure 3. This is an old circuit board from a washing machine. See the stripey things? Those are resistors. All circuit boards have gobs of resistors, because they control how much current flows through each circuit. The power supply has to feed multiple circuits, so it always pushes out more power than any individual circuit can handle. Resistors on each circuit throttle the current down to a level that circuit can safely handle.
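The throttling effect follows from Ohm's law: current equals voltage divided by resistance. Here is a minimal sketch. The function name `current_amps` and the 12-volt supply and resistor values are illustrative assumptions, not measurements from the washing machine board.

```python
def current_amps(supply_volts, resistance_ohms):
    """Return the current through a resistor using Ohm's law, I = V / R."""
    if resistance_ohms <= 0:
        raise ValueError("resistance must be positive")
    return supply_volts / resistance_ohms


if __name__ == "__main__":
    supply = 12.0  # assumed supply voltage, in volts
    for ohms in (100.0, 1000.0):  # assumed resistor values, in ohms
        amps = current_amps(supply, ohms)
        print(f"{ohms:.0f} ohms -> {amps * 1000:.0f} mA")
```

At the same supply voltage, ten times the resistance means one tenth of the current, which is why a bigger resistor protects a more delicate circuit.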

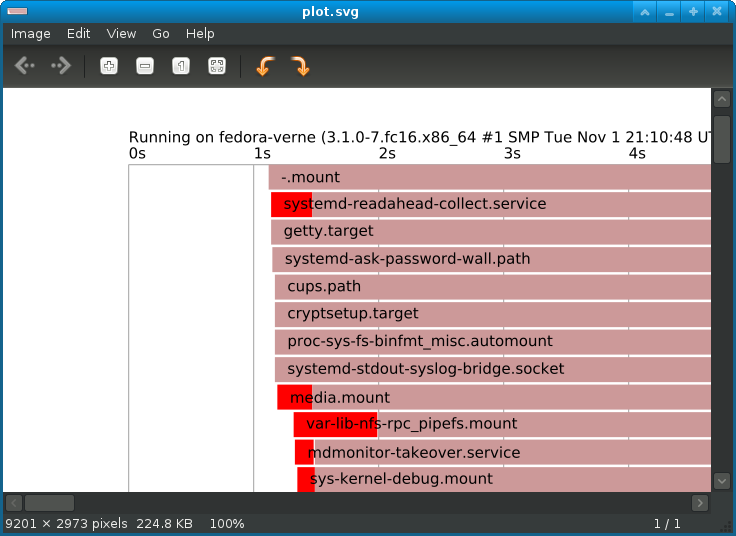

There isn't much we can do about BIOS times. Some are fast, some are slow, and unless you're using a system with

Use of Linux in the mobile/embedded space is exploding, and we find many companies are adopting the open source Yocto Project to build custom embedded Linux systems. The project is hosting a free day of Yocto training on Feb. 14 as part of the Embedded Linux Conference. This is a fantastic opportunity to learn Yocto if you're a beginner, or to get more advanced if you are already familiar with the tool. Find out more about