Right this very second, you are looking at a Web browser. At least, those are the odds. But while that's mildly interesting to me, detailed data on where users look (and for how long) is mission-critical. Web designers want to know if visitors are distracted from the contents of the page. Application developers want to know if users have trouble finding the important tools and functions on screen. Plus, for the accessibility community, being able to track eye motion lets you provide text input and cursor control to people who can't operate standard I/O devices. Let's take a look at what open source software is out there to track eye movement and turn it into useful data.

The categories mentioned above do a fairly clean job of dividing up the eye-tracking projects. Some are designed primarily for use in user-behavior studies, like you might find in a laboratory setting. Some are intended to serve as part of an input framework for people with disabilities. But even within those basic categories, you'll find plenty of variety and flexibility.

For example, there are eye-tracking projects designed to work with standard, run-of-the-mill Webcams (like those that come conveniently attached to the top edge of so many laptops), and those meant to be used with a specialty, head-mounted apparatus.

Many projects have a particular use-case in mind, but with the ready availability of Webcams, developers are exploring alternative uses suitable for gaming, gesture-input, and all sorts of crazy ideas. In addition, regardless of how you capture the eye-tracking data, it requires special software to interpret it in a useful fashion.

Tracking Eye Movement With a Webcam

On the inexpensive end of the hardware spectrum are those projects that implement eye-tracking using a standard-issue Webcam.

OpenGazer is by far the simplest such project to get started with. The code is developed as an academic research effort, which has the unfortunate side effect of making public releases sporadic. The tarball linked to from the project's home page is a couple of years old; however, there is much newer code available on GitHub, along with compilation and installation instructions.

OpenGazer is licensed under GPLv2, and includes a Python application called HeadTracker that tracks head motion in order to narrow down the field of vision that OpenGazer watches for eye movement. Any USB Webcam supported by Linux will work.

Two more offerings are designed to work with USB Webcams, but they're both written for Windows. The licenses do permit them to be adapted to Linux and other OSes, however. Gaze Tracker is a GPLv3 tool with a GUI and a built-in calibration aid. It supports both video and infrared Webcams.

TrackEye is licensed under the Code Project Open License, which seems to be unique to the author's hosting site. Notably, the only restrictions it imposes are not on the reuse or redistribution of the software, but on altering the accompanying documents. Given the choice, a standard, accepted license is always preferable, but TrackEye may be worth studying.

Tracking Eye Movement with Specialty Equipment

There are two open source eye-tracking tools that require specialty headgear -- akin to a glasses frame with a miniature Webcam attached, aimed at the eyes. It may not look cool, but the restriction saves CPU cycles by ensuring that the wearer's eyes are always in-frame, and no code is required to first locate the eye before tracking the movement.

openEyes is a project that produces three separate tools. cvEyeTracker is a standalone, real-time eye-tracking application, built on top of the OpenCV computer vision library. However, it's designed to function with two video cameras attached, and appears to expect both of them to be FireWire. Visible Spectrum Starburst is a tool for picking up eye movement in a video file recorded separately, which may be a simpler solution for those without access to the FireWire hardware needed by cvEyeTracker.

The third package, Starburst, is a stand-alone pupil-recognition tool; the algorithm is the same one used by both of the other applications — it is just packaged separately for easier re-use. All of the openEyes code is licensed under GPLv2. The project also includes plans for building the video capture hardware used by the applications.
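
To give a feel for what "pupil recognition" means at its most basic, here is a minimal sketch. To be clear, this is not the Starburst algorithm; it is a much cruder threshold-and-centroid stand-in that treats the darkest pixels in a close-up eye image as the pupil. The function name `pupil_centroid`, the `threshold` default, and the list-of-lists grayscale frame are all assumptions made for illustration.

```python
def pupil_centroid(frame, threshold=50):
    """Return the (x, y) centroid of all pixels darker than `threshold`.

    `frame` is a hypothetical grayscale image given as a list of rows,
    each row a list of 0-255 intensity values. Returns None when no
    pixel is dark enough to count as pupil.
    """
    total_x = total_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value < threshold:
                total_x += x
                total_y += y
                count += 1
    if count == 0:
        return None
    return (total_x / count, total_y / count)


# A tiny 5x5 "eye image": a dark 3x3 pupil on a bright background.
frame = [
    [200, 200, 200, 200, 200],
    [200,  10,  10,  10, 200],
    [200,  10,  10,  10, 200],
    [200,  10,  10,  10, 200],
    [200, 200, 200, 200, 200],
]
print(pupil_centroid(frame))
```

A head-mounted camera makes this kind of shortcut plausible because the eye fills the frame; with an ordinary Webcam you would first have to find the face and the eye region, and eyelashes or shadows would easily fool a bare threshold.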

The EyeWriter project is an effort to build a usable eye-movement input system for a user with paralysis. However, the code it has produced is general-purpose enough to be used for other projects as well. It is designed to work with the PlayStation Eye, an off-the-shelf component akin to the more widely known Microsoft Kinect.

Eye-Driven Input and Pointer Control

Using eye-tracking software as an accessibility tool (as the EyeWriter project does) includes not only identifying the iris and pupil in a video image, but translating the motion that it detects into the desktop environment's input system.
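
The "translating" step can be sketched in a few lines. The example below assumes the tracker hands you a gaze estimate normalized to the 0..1 range on each axis, and it smooths that estimate with an exponential moving average before converting it to pixel coordinates, since raw gaze data tends to jitter. The class name `PointerMapper` and its parameters are hypothetical, and actually moving the pointer (through whatever input API your desktop provides) is deliberately left out.

```python
class PointerMapper:
    """Map normalized gaze estimates to smoothed screen coordinates."""

    def __init__(self, screen_width, screen_height, smoothing=0.3):
        self.screen_width = screen_width
        self.screen_height = screen_height
        # 0 < smoothing <= 1; smaller values give a steadier, slower pointer.
        self.smoothing = smoothing
        self._position = None

    def update(self, gaze_x, gaze_y):
        """Take a gaze point in 0..1 coordinates, return integer pixels."""
        # Clamp estimates that land outside the screen.
        gaze_x = min(max(gaze_x, 0.0), 1.0)
        gaze_y = min(max(gaze_y, 0.0), 1.0)
        target = (gaze_x * (self.screen_width - 1),
                  gaze_y * (self.screen_height - 1))
        if self._position is None:
            # First sample: jump straight to the target.
            self._position = target
        else:
            # Exponential moving average toward the new target.
            a = self.smoothing
            self._position = (
                self._position[0] + a * (target[0] - self._position[0]),
                self._position[1] + a * (target[1] - self._position[1]),
            )
        return (round(self._position[0]), round(self._position[1]))


mapper = PointerMapper(1920, 1080)
for gaze in [(0.50, 0.50), (0.52, 0.49), (0.90, 0.10)]:
    print(mapper.update(*gaze))
```

The smoothing factor is the main design trade-off: too low and the pointer lags behind the eye, too high and it shakes with every micro-movement.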

GNOME's MouseTrap is a component designed to do this. Thanks to its integration with the GNOME desktop environment, it is relatively easy to get started with (in fact, many distributions already package it). However, MouseTrap does rely on an older version of OpenCV. There is a patch to update the code for the latest changes, but GNOME 3 is still in the process of updating the usability tools found in GNOME 2.x, so you could experience other incompatibilities.

eViacam is a newer project – still actively developed – that uses head-tracking to move the mouse pointer. In October 2011, the GNOME project discussed the possibility of using eViacam as a modern replacement for MouseTrap, but decided that for the time being, it was not a good fit due to its non-GNOME dependencies.

SITPLUS is a multi-input system that supports eye tracking as well as motion capture through Nintendo Wii remotes and several other mechanisms. It is a GPLed framework for designing interactive applications – the project's main goal appears to be promoting activity for people with cerebral palsy and other motor impairments, but it has other potential uses as well.

OpenGazer (mentioned in the Webcam section above) includes a facial-gesture recognition engine that can also drive the Dasher gesture-driven text input system, although here again you may have to do some work to integrate it with your system.

Finally, there are two hardware-centric projects worth mentioning. The Eyeboard is an inexpensive hardware device that uses electro-oculography (detecting eye movement with electrodes, rather than through video pattern-recognition) and a special text input frame to allow users to type by focusing on the monitor.

The Eye Gaze is a person-to-person communication device that uses a "window frame" to track the letters and numbers selected by the user. As the wiki article explains, however, commercial devices like the Eye Gaze are often expensive — but they do not have to be, and their simplicity makes them easily usable with ordinary Webcams.

Processing Eye-Movement Data

In contrast, the "usability study" use case for eye-tracking data requires software to map the eye movements, so that they can be overlaid on a site or application design to follow eye motion, or used to generate a heat-map of which regions capture the most attention. Currently there appears to be no eye-movement analysis or visualization software developed for Linux; however, there are some successful tools for other platforms that could form the basis for viable ports.
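
The heat-map idea is simple enough to sketch without any of the tools below. This example assumes gaze samples arrive as `(x, y, duration_ms)` tuples in screen pixels, and it just sums viewing time per grid cell; the function name `gaze_heatmap`, the sample format, and the 100-pixel cell size are illustrative assumptions, not taken from any of the projects discussed here.

```python
def gaze_heatmap(samples, width, height, cell=100):
    """Sum gaze duration per grid cell.

    `samples` is an iterable of (x, y, duration_ms) tuples in pixel
    coordinates. Returns a list of rows, each a list of total
    milliseconds spent looking at that cell. Off-screen samples are
    ignored.
    """
    cols = (width + cell - 1) // cell   # round up so edges are covered
    rows = (height + cell - 1) // cell
    grid = [[0] * cols for _ in range(rows)]
    for x, y, duration_ms in samples:
        if 0 <= x < width and 0 <= y < height:
            grid[int(y) // cell][int(x) // cell] += duration_ms
    return grid


samples = [
    (50, 50, 200),     # top-left cell
    (60, 40, 300),     # top-left cell again
    (250, 150, 1000),  # bottom-right cell
    (-5, 10, 900),     # off-screen, ignored
]
for row in gaze_heatmap(samples, width=300, height=200):
    print(row)
```

A real visualization would blur the grid and blend it over a screenshot, but the underlying data is just this kind of accumulation.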

CARPE (for Computational and Algorithmic Representation and Processing of Eye-movements) is a GPLv3 library for visualizing eye motion data. It can create contour maps, heat maps, cluster plots, and several other visualizations, and can overlay the data onto video for easier analysis. It is Windows-only at the moment, although it uses OpenCV under the hood.

OGAMA (for Open Gaze And Mouse Analyzer) is another Windows-based toolkit. It is also GPLv3, and it is written in C#. It includes a live eye-motion recording component in addition to its analysis and visualization components. The software can process raw eye tracking data to locate points of interest, calculate statistics for external analysis, and create several visualizations.
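
Locating points of interest in raw gaze data usually starts with finding fixations, the moments when the eye rests in one place. The sketch below uses a dispersion-threshold approach (often called I-DT), a common textbook technique; it is not a description of how OGAMA itself works. The `(time_ms, x, y)` sample format, the function names, and the threshold defaults are assumptions for illustration.

```python
def dispersion(window):
    """Spread of a window of (time_ms, x, y) samples: x range + y range."""
    xs = [p[1] for p in window]
    ys = [p[2] for p in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion=25, min_duration_ms=100):
    """Return fixations as (start_ms, end_ms, center_x, center_y) tuples."""
    fixations = []
    start = 0
    n = len(samples)
    while start < n:
        # Grow the window until it spans at least min_duration_ms.
        end = start
        while end < n and samples[end][0] - samples[start][0] < min_duration_ms:
            end += 1
        if end >= n:
            break  # not enough data left for another fixation
        if dispersion(samples[start:end + 1]) <= max_dispersion:
            # Extend the window while the points stay tightly clustered.
            while end + 1 < n and dispersion(samples[start:end + 2]) <= max_dispersion:
                end += 1
            window = samples[start:end + 1]
            cx = sum(p[1] for p in window) / len(window)
            cy = sum(p[2] for p in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            start = end + 1
        else:
            # Too spread out: drop the first sample and try again.
            start += 1
    return fixations


# Two clusters of samples 20 ms apart, joined by one in-flight sample.
samples = [
    (0, 100, 100), (20, 101, 100), (40, 100, 101),
    (60, 99, 100), (80, 100, 99), (100, 100, 100),
    (120, 250, 150),
    (140, 400, 200), (160, 401, 200), (180, 400, 201),
    (200, 399, 200), (220, 400, 199), (240, 400, 200),
]
for fixation in detect_fixations(samples):
    print(fixation)
```

The two thresholds do all the work here, and sensible values depend on the tracker's sampling rate and on how far the user sits from the screen.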

RITcode is an eye-motion analysis framework developed by the Rochester Institute of Technology's Visual Perception Lab. It is developed for Mac OS X, although the code base has not been updated in some time.

Several utilities with good reputations have, for whatever reason, been made available under awkward or incompatible licensing terms. One example is the set of eye-movement classification tools from Oleg Komogortsev at Texas State University. Dr. Komogortsev says he wants them to be available to the community, but they are only usable by requesting a password directly from the researchers.

A similar situation exists for the Eye-Tracking Universal Driver and the MyEye project, both of which are only available under non-specific "freeware" terms. For all practical purposes, these licensing situations restrict the software's usage considerably, and will continue to do so unless the authors have a change of heart and adopt standard licenses.

Looking Ahead

For Linux users, then, the eye-tracking marketplace is a bit of a mixed bag. There is plenty of "raw material" — including eye-movement capture software, frameworks for using eye motion as input, and algorithms for analyzing and visualizing motion data. The trouble is that most of it is either developed only for Windows, or it is maintained as a stand-alone project that makes integrating with other software difficult.

This does not mean that the situation is dire, however. The GNOME accessibility team, for example, is still pursuing eye-tracking at its hackfests as well as exploring several of the independent projects mentioned above. Not too long ago, that included meeting up with the aforementioned OpenGazer project, among others.

What is less clear is where Linux fans can collaborate with the data analysis and visualization projects. The biggest users of such technology are in the human-computer interaction (HCI) and UI design communities, which constitute a small group within the larger Linux universe. Still, there is clearly enough knowledge out there, and enough license-compatible software available, that an interested party could pick up the pieces and assemble a high-quality, open source solution. The rise in popularity of the Microsoft Kinect (particularly the OpenKinect free software drivers) could reinvigorate interest in eye-tracking, to everyone's benefit.

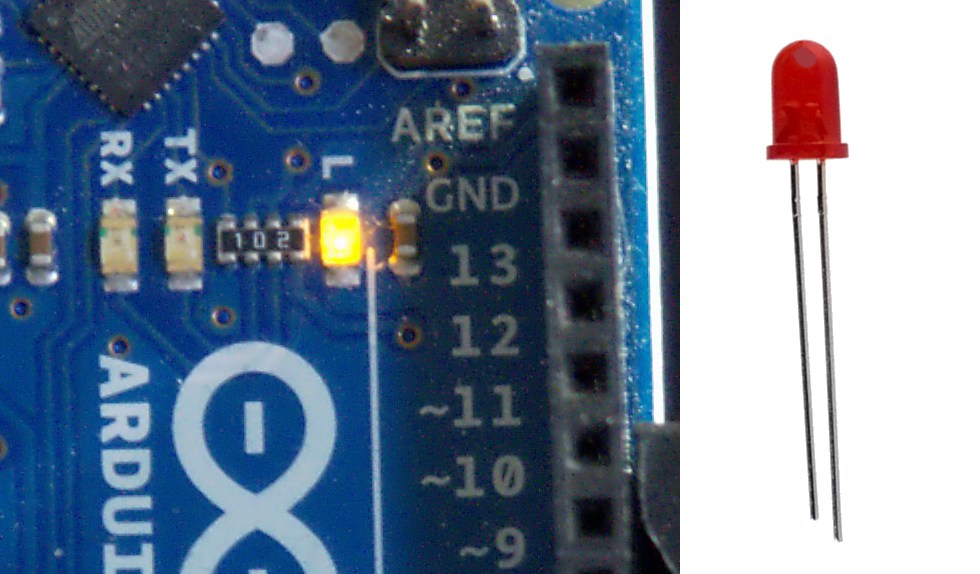

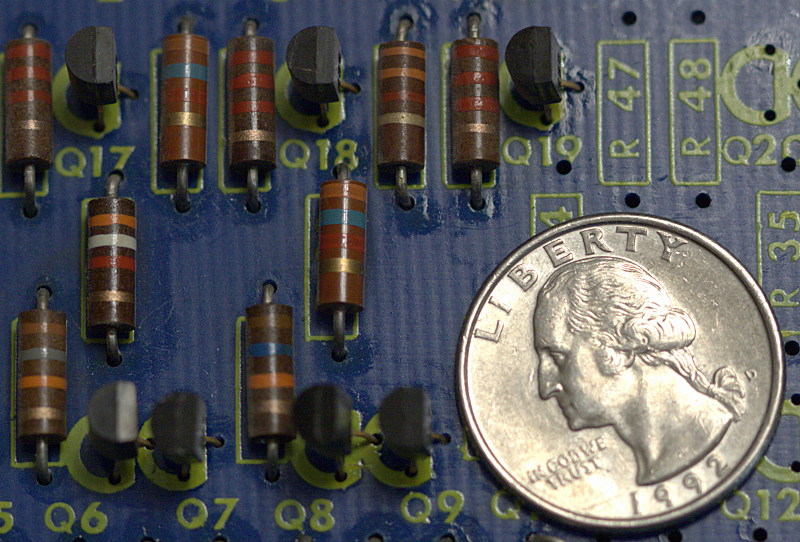

Talk is cheap, so take a look at Figure 3. This is an old circuit board from a washing machine. See the stripey things? Those are resistors. All circuit boards have gobs of resistors, because these control how much current flows over each circuit. The power supply always pushes out more power than the individual circuits can handle, because it has to supply multiple circuits. So there are resistors on each circuit to throttle down the current to where it can safely handle it.
